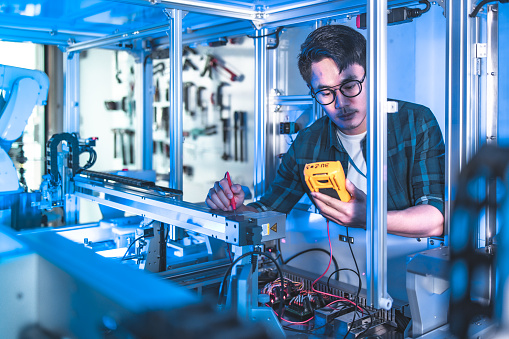

electronics, branch of physics and electrical engineering that deals with the emission, behaviour, and effects of electrons and with electronic devices.

Electronics encompasses an exceptionally broad range of technology. The term originally was applied to the study of electron behaviour and movement, particularly as observed in the first electron tubes. It came to be used in its broader sense with advances in knowledge about the fundamental nature of electrons and about the way in which the motion of these particles could be utilized. Today many scientific and technical disciplines deal with different aspects of electronics. Research in these fields has led to the development of such key devices as transistors, integrated circuits, lasers, and optical fibres. These in turn have made it possible to manufacture a wide array of electronic consumer, industrial, and military products. Indeed, it can be said that the world is in the midst of an electronic revolution at least as significant as the industrial revolution of the 19th century.

The history of electronics

The vacuum tube era

Theoretical and experimental studies of electricity during the 18th and 19th centuries led to the development of the first electrical machines and the beginning of the widespread use of electricity. The history of electronics began to evolve separately from that of electricity late in the 19th century with the identification of the electron by the English physicist Sir Joseph John Thomson and the measurement of its electric charge by the American physicist Robert A. Millikan in 1909.

At the time of Thomson’s work, the American inventor Thomas A. Edison had observed a bluish glow in some of his early lightbulbs under certain conditions and found that a current would flow from one electrode in the lamp to another if the second one (anode) were made positively charged with respect to the first (cathode). Work by Thomson and his students and by the English engineer John Ambrose Fleming revealed that this so-called Edison effect was the result of the emission of electrons from the cathode, the hot filament in the lamp. The motion of the electrons to the anode, a metal plate, constituted an electric current that would not exist if the anode were negatively charged.

This discovery provided impetus for the development of electron tubes, including an improved X-ray tube by the American engineer William D. Coolidge and Fleming’s thermionic valve (a two-electrode vacuum tube) for use in radio receivers. The detection of a radio signal, which is a very high-frequency alternating current (AC), requires that the signal be rectified; i.e., the alternating current must be converted into a direct current (DC) by a device that conducts only when the signal has one polarity but not when it has the other—precisely what Fleming’s valve (patented in 1904) did. Previously, radio signals were detected by various empirically developed devices such as the “cat whisker” detector, which was composed of a fine wire (the whisker) in delicate contact with the surface of a natural crystal of lead sulfide (galena) or some other semiconductor material. These devices were undependable, lacked sufficient sensitivity, and required constant adjustment of the whisker-to-crystal contact to produce the desired result. Yet these were the forerunners of today’s solid-state devices. The fact that crystal rectifiers worked at all encouraged scientists to continue studying them and gradually to obtain the fundamental understanding of the electrical properties of semiconducting materials necessary to permit the invention of the transistor.
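The rectification described above can be illustrated numerically. The following is a minimal sketch, assuming an idealized device that passes current perfectly in one polarity and blocks it completely in the other; the function names `sine_wave` and `half_wave_rectify` are illustrative and do not model the actual characteristics of Fleming's valve or a crystal detector.

```python
import math


def sine_wave(n_samples, cycles=2.0, amplitude=1.0):
    """Return samples of an alternating signal spanning a whole number of cycles."""
    return [
        amplitude * math.sin(2 * math.pi * cycles * i / n_samples)
        for i in range(n_samples)
    ]


def half_wave_rectify(samples):
    """Idealized rectifier (an assumption): conduct on positive polarity only."""
    return [s if s > 0 else 0.0 for s in samples]


ac = sine_wave(1000)
rectified = half_wave_rectify(ac)

# The alternating signal averages to zero; the rectified one does not.
mean_ac = sum(ac) / len(ac)
mean_rectified = sum(rectified) / len(rectified)
print(f"mean of AC signal:        {mean_ac:.4f}")
print(f"mean of rectified signal: {mean_rectified:.4f}")
```

The unrectified signal averages to zero, whereas the rectified signal has a positive average close to the amplitude divided by π. That nonzero average is the direct-current component a detector can use.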

In 1906 Lee De Forest, an American engineer, developed a type of vacuum tube that was capable of amplifying radio signals. De Forest added a grid of fine wire between the cathode and anode of the two-electrode thermionic valve constructed by Fleming. The new device, which De Forest dubbed the Audion (patented in 1907), was thus a three-electrode vacuum tube. In operation, the anode in such a vacuum tube is given a positive potential (positively biased) with respect to the cathode, while the grid is negatively biased. A large negative bias on the grid prevents any electrons emitted from the cathode from reaching the anode; however, because the grid is largely open space, a less negative bias permits some electrons to pass through it and reach the anode. Small variations in the grid potential can thus control large amounts of anode current.

The vacuum tube permitted the development of radio broadcasting, long-distance telephony, television, and the first electronic digital computers. These early electronic computers were, in fact, the largest vacuum-tube systems ever built. Perhaps the best-known representative is the ENIAC (Electronic Numerical Integrator and Computer), completed in 1946.

The special requirements of the many different applications of vacuum tubes led to numerous improvements, enabling them to handle large amounts of power, operate at very high frequencies, have greater than average reliability, or be made very compact (the size of a thimble). The cathode-ray tube, originally developed for displaying electrical waveforms on a screen for engineering measurements, evolved into the television picture tube. Such tubes operate by forming the electrons emitted from the cathode into a thin beam that impinges on a fluorescent screen at the end of the tube. The screen emits light that can be viewed from outside the tube. Deflecting the electron beam causes patterns of light to be produced on the screen, creating the desired optical images.

Notwithstanding the remarkable success of solid-state devices in most electronic applications, there are certain specialized functions that only vacuum tubes can perform. These usually involve operation at extremes of power or frequency.

Vacuum tubes are fragile and ultimately wear out in service. Failure occurs in normal usage either from the effects of repeated heating and cooling as equipment is switched on and off (thermal fatigue), which ultimately causes a physical fracture in some part of the interior structure of the tube, or from degradation of the properties of the cathode by residual gases in the tube. Vacuum tubes also take time (from a few seconds to several minutes) to “warm up” to operating temperature—an inconvenience at best and in some cases a serious limitation to their use. These shortcomings motivated scientists at Bell Laboratories to seek an alternative to the vacuum tube and led to the development of the transistor.

The semiconductor revolution

Invention of the transistor

The invention of the transistor in 1947 by John Bardeen, Walter H. Brattain, and William B. Shockley of the Bell research staff provided the first of a series of new devices with remarkable potential for expanding the utility of electronic equipment. Transistors, along with such subsequent developments as integrated circuits, are made of crystalline solid materials called semiconductors, which have electrical properties that can be varied over an extremely wide range by the addition of minuscule quantities of other elements. The electric current in semiconductors is carried by electrons, which have a negative charge, and also by “holes,” analogous entities that carry a positive charge. The availability of two kinds of charge carriers in semiconductors is a valuable property exploited in many electronic devices made of such materials.

Early integrated circuits (ICs) contained about 10 individual components on a silicon chip 3 mm (0.12 inch) square. By 1970 the number was up to 1,000 on a chip of the same size at no increase in cost. Late in the following year the first microprocessor was introduced. The device contained all the arithmetic, logic, and control circuitry required to perform the functions of a computer’s central processing unit (CPU). This type of large-scale IC was developed by a team at Intel Corporation, the same company that also introduced the memory IC in 1971. The stage was now set for the computerization of small electronic equipment.

Until the microprocessor appeared on the scene, computers were essentially discrete pieces of equipment used primarily for data processing and scientific calculations. They ranged in size from minicomputers, comparable in dimensions to a small filing cabinet, to mainframe systems that could fill a large room. The microprocessor enabled computer engineers to develop microcomputers: systems about the size of a lunch box or smaller but with enough computing power to perform many kinds of business, industrial, and scientific tasks. Such systems made it possible to control a host of small instruments or devices (e.g., numerically controlled lathes and one-armed robotic devices for spot welding) by using standard components programmed to do a specific job. The very existence of computer hardware inside such devices is not apparent to the user.

The large demand for microprocessors generated by these initial applications led to high-volume production and a dramatic reduction in cost. This in turn promoted the use of the devices in many other applications—for example, in household appliances and automobiles, for which electronic controls had previously been too expensive to consider. Continued advances in IC technology gave rise to very large-scale integration (VLSI), which substantially increased the circuit density of microprocessors. These technological advances, coupled with further cost reductions stemming from improved manufacturing methods, made feasible the mass production of personal computers for use in offices, schools, and homes.

Digital electronics

Computers understand only two numbers, 0 and 1, and do all their arithmetic operations in this binary mode. Many electrical and electronic devices have two states: they are either off or on. A light switch is a familiar example, as are vacuum tubes and transistors. Because computers have been a major application for integrated circuits from their beginning, digital integrated circuits have become commonplace. It has thus become easy to design electronic systems that use digital language to control their functions and to communicate with other systems.
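To make the idea of two-state arithmetic concrete, the sketch below adds two numbers using only the logical operations AND, OR, and exclusive-OR on single bits, which is how an adder circuit can be built from on/off devices. It is an illustrative model, not a description of any particular computer; the helper names (`half_adder`, `full_adder`, `add_binary`, `to_bits`, `from_bits`) are assumptions introduced here.

```python
def half_adder(a, b):
    """Add two bits; return (sum bit, carry bit)."""
    return a ^ b, a & b


def full_adder(a, b, carry_in):
    """Add two bits plus an incoming carry; return (sum bit, carry out)."""
    partial_sum, carry_1 = half_adder(a, b)
    total, carry_2 = half_adder(partial_sum, carry_in)
    return total, carry_1 | carry_2


def add_binary(x_bits, y_bits):
    """Add two equal-length bit lists, least significant bit first."""
    result = []
    carry = 0
    for a, b in zip(x_bits, y_bits):
        bit, carry = full_adder(a, b, carry)
        result.append(bit)
    result.append(carry)  # final carry becomes the most significant bit
    return result


def to_bits(n, width):
    """Convert a non-negative integer to a bit list, least significant bit first."""
    return [(n >> i) & 1 for i in range(width)]


def from_bits(bits):
    """Convert a bit list (least significant bit first) back to an integer."""
    return sum(bit << i for i, bit in enumerate(bits))


total_bits = add_binary(to_bits(5, 4), to_bits(6, 4))
print(total_bits, "=", from_bits(total_bits))  # the bits represent 11
```

Every step operates on values that are either 0 or 1, so each operation can be carried out by a device with just two states.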

A major advantage in using digital methods is that the accuracy of a stream of digital signals can be verified, and, if necessary, errors can be corrected. In contrast, signals that vary in proportion to, say, the sound of an orchestra can be corrupted by “noise,” which once present cannot be removed. An example is the sound from a phonograph record, which always contains some extraneous sound from the surface of the recording groove even when the record is new. The noise becomes more pronounced with wear. Contrast this with the sound from a digital compact disc recording. No sound is heard that was not present in the recording studio. The disc and the player contain error-correcting features that remove any incorrect pulses (perhaps arising from dust on the disc) from the information as it is read from the disc.
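The principle of verifying and correcting a digital stream can be shown with a small textbook code. The sketch below uses a Hamming (7,4) code, which adds three check bits to every four data bits so that any single incorrect bit can be located and flipped back. This is chosen only for illustration and is not the scheme used on compact discs, which rely on a more elaborate code; the function names are assumptions.

```python
def hamming74_encode(data):
    """Encode 4 data bits as 7 bits: positions 1, 2, and 4 hold parity checks."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4  # covers positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # covers positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4  # covers positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]


def hamming74_decode(code):
    """Correct at most one flipped bit, then return the 4 data bits."""
    c = list(code)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]
    # The three check results, read as a binary number, give the
    # 1-based position of the bad bit (0 means no error was detected).
    error_position = s1 + 2 * s2 + 4 * s3
    if error_position:
        c[error_position - 1] ^= 1
    return [c[2], c[4], c[5], c[6]]


original = [1, 0, 1, 1]
sent = hamming74_encode(original)

received = list(sent)
received[5] ^= 1  # simulate one corrupted pulse, e.g. from dust

recovered = hamming74_decode(received)
print("recovered equals original:", recovered == original)
```

An analog signal has no equivalent check: there is nothing in the waveform itself that distinguishes the added noise from the intended sound.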

As electronic systems become more complex, it is essential that errors produced by noise be removed; otherwise, the systems may malfunction. Many electronic systems are required to operate in electrically noisy environments, such as in an automobile. The only practical way to assure immunity from noise is to make such a system operate digitally. In principle it is possible to correct for any arbitrary number of errors, but in practice this may not be possible. The amount of extra information that must be handled to correct for large rates of error reduces the capacity of the system to handle the desired information, and so trade-offs are necessary.
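The trade-off between error tolerance and capacity can be sketched with the simplest possible scheme, a repetition code, in which each bit is sent n times and the receiver takes a majority vote. This is an illustrative assumption rather than a scheme a practical system would normally use, and the function names are hypothetical; it shows only that tolerating more errors per bit leaves a smaller fraction of the channel for useful information.

```python
def repeat_encode(bits, n):
    """Send every bit n times (n should be odd so a majority always exists)."""
    return [bit for bit in bits for _ in range(n)]


def repeat_decode(coded, n):
    """Recover each bit by majority vote over its block of n copies."""
    return [
        1 if sum(coded[i:i + n]) > n // 2 else 0
        for i in range(0, len(coded), n)
    ]


def code_rate(n):
    """Fraction of transmitted bits that carry useful information."""
    return 1 / n


def correctable_errors(n):
    """Number of flipped copies per block that majority voting still tolerates."""
    return (n - 1) // 2


message = [1, 0, 1]
coded = repeat_encode(message, 5)
coded[0] ^= 1  # two errors in the first block of five
coded[3] ^= 1
decoded = repeat_decode(coded, 5)
print("decoded equals message:", decoded == message)

for n in (1, 3, 5, 7):
    print(f"n={n}: corrects {correctable_errors(n)} error(s) per block, "
          f"rate {code_rate(n):.3f}")
```

Each increase in the number of correctable errors lowers the rate, so a designer must choose how much capacity to give up for a given level of protection.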

A consequence of the veritable explosion in the number and kinds of electronic systems has been a sharp growth in the electrical noise level of the environment. Any electrical system generates some noise, and all electronic systems are to some degree susceptible to disturbance from noise. The noise may be conducted along wires connected to the system, or it may be radiated through the air. Care is necessary in the design of systems to limit the amount of noise that is generated and to shield the system properly to protect it from external noise sources.

Optoelectronics

A new direction in electronics employs photons (packets of light) instead of electrons. By common consent these new approaches are included in electronics, because the functions that are performed are, at least for the present, the same as those performed by electronic systems and because these functions usually are embedded in a largely electronic environment. This new direction is called optical electronics or optoelectronics.